
AboutOpen | 2023; 10: 88-96 | ISSN 2465-2628 | DOI: 10.33393/ao.2023.2618 | POINT OF VIEW


Unraveling the Enigma: how can ChatGPT perform so well with language understanding, reasoning, and knowledge processing without having real knowledge or logic?

ABSTRACT

Artificial Intelligence (AI) has made significant progress in various domains, but the quest for machines to truly understand natural language has been challenging. Traditional mainstream approaches to AI, while valuable, often struggled to achieve human-level language comprehension. However, the emergence of neural networks and the subsequent adoption of the downstream approach have revolutionized the field, as demonstrated by the powerful and successful language model, ChatGPT.

The deep learning algorithms utilized in large language models (LLMs) differ significantly from those employed in traditional neural networks.

This article endeavors to provide a valuable and insightful exploration of the functionality and performance of generative AI. It aims to accomplish this by offering a comprehensive, yet simplified, analysis of the underlying mathematical models used by systems such as ChatGPT. The primary objective is to explore the diverse performance capabilities of these systems across some important domains such as clinical practice. The article also sheds light on the existing gaps and limitations that impact the quality and reliability of generated answers. Furthermore, it delves into potential strategies aimed at improving the reliability and cognitive aspects of generative AI systems.

Keywords: AI (Artificial Intelligence), CDSS (Clinical Decision Support Systems), ChatGPT, LLM (Large Language Models), Neuro-symbolic Artificial Intelligence, Artificial Intelligence generated differential diagnosis

Received: June 7, 2023

Accepted: June 7, 2023

Published online: June 20, 2023

AboutOpen - ISSN 2465-2628 - www.aboutscience.eu/aboutopen

© 2023 The Authors. This article is published by AboutScience and licensed under Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0). Commercial use is not permitted and is subject to Publisher’s permissions. Full information is available at www.aboutscience.eu

Introduction

Traditional Artificial Intelligence (AI) systems followed a top-down approach, where human programmers encoded the knowledge required to perform specific tasks. For example, when developing an AI system to generate a comprehensive list of possible diagnoses for a clinical problem, the initial step involved incorporating a complete representation of medical knowledge. This knowledge formed the basis for generating outputs (diagnoses) based on inputs such as signs, symptoms, lab tests, and the patient’s medical history. The explicit causal relationships between different knowledge items were defined, and statistical analysis and logical inference were used to create rules governing the system’s operation during the process of generating a differential diagnosis.

Similar knowledge representation and rule-based approaches were employed for other tasks, such as natural language understanding. A knowledge representation of natural language was constructed, accompanied by a set of rules and logic necessary for language comprehension, encompassing aspects such as grammar and syntax.

After years of extensive field experience, it became evident that encoding knowledge and relying solely on explicit reasoning to determine the functions within the black box that transform inputs into outputs was inadequate for achieving high performance. In domains such as language or medicine, the complexity of reality surpasses the capabilities of a top-down mainstream approach. In medicine, we encounter intricate nonlinear systems in which simple linear causality and the superposition of causes and effects do not hold.

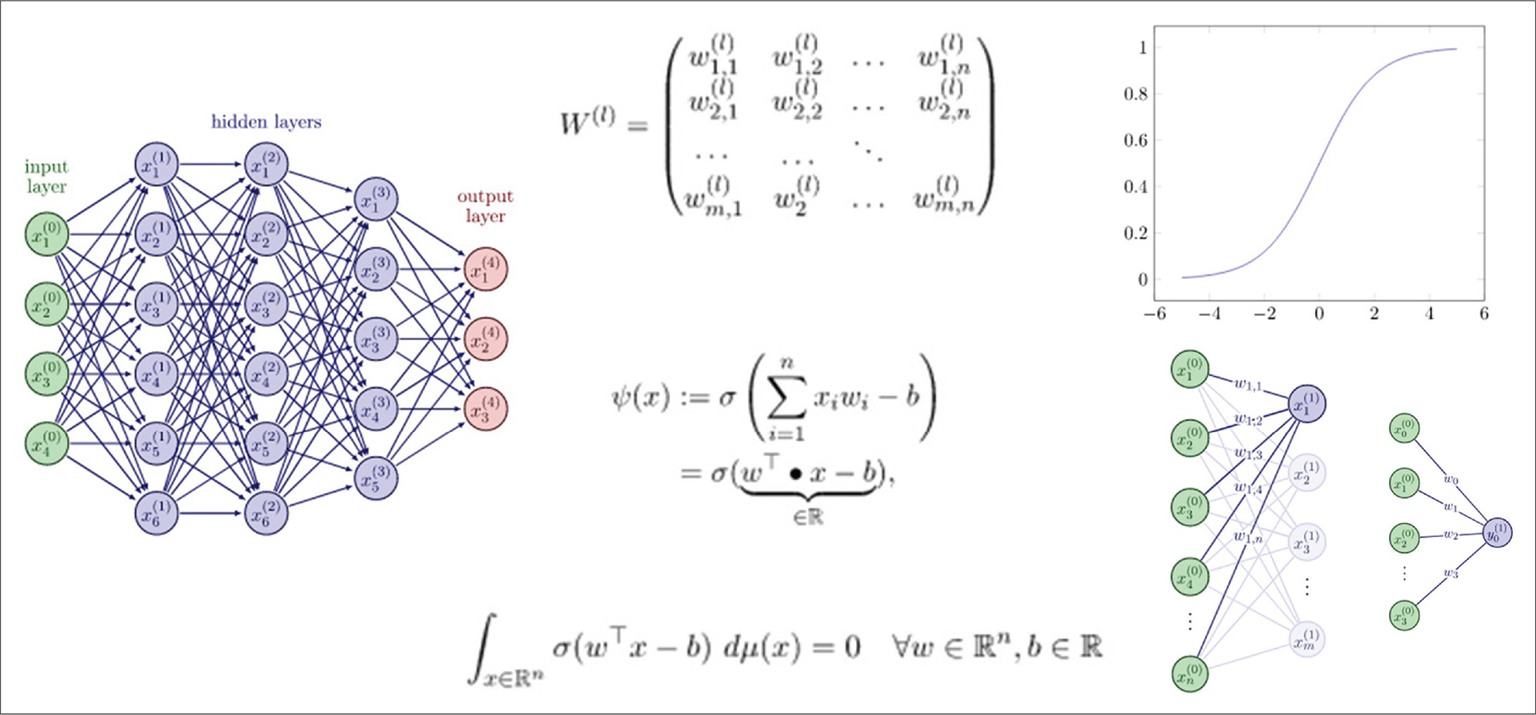

Fig. 1 - The Universal Approximation Theorem (2).

In recent years, a notable conceptual shift has occurred, transitioning from a mainstream approach to a downstream perspective. This shift entails relinquishing the necessity of encoding knowledge and explicitly deriving the mathematical function within the black box that transforms inputs into outputs. One pivotal milestone in this paradigm shift is the Universal Approximation Theorem, established by Kurt Hornik, Maxwell Stinchcombe, and Halbert White in 1989 (Fig. 1). Their influential paper (1) demonstrated that a feedforward neural network with a single hidden layer and a suitable nonlinear activation function can approximate any continuous function on a compact (closed and bounded) domain to any desired accuracy, provided the hidden layer contains enough units. The theorem guarantees that such a network exists; it does not say how many units are needed or how to find the weights by training. Even so, it signifies that neural networks have, in principle, the capacity to represent a very wide range of input-output mappings and therefore to learn a wide range of tasks.
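As a compact reminder of what the theorem asserts, it can be written roughly as follows. The notation is an assumption introduced here for illustration: $f$ is the continuous target function, $K$ a compact subset of $\mathbb{R}^n$, $\sigma$ the activation function, $N$ the number of hidden units, and $\alpha_j$, $w_j$, $b_j$ the network's weights and biases.

```latex
\forall \varepsilon > 0 \;\; \exists\, N,\ \alpha_j \in \mathbb{R},\ w_j \in \mathbb{R}^n,\ b_j \in \mathbb{R} :
\qquad
\sup_{x \in K} \left| \, f(x) - \sum_{j=1}^{N} \alpha_j \, \sigma\!\left( w_j^{\top} x + b_j \right) \right| < \varepsilon
```

The sum on the right is exactly a single-hidden-layer network with $N$ units. The statement is one of existence: it places no bound on $N$ and gives no procedure for finding the parameters.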

Unlike earlier approaches that focused on designing explicit linguistic rules or intermediate representations, the downstream approach utilized large-scale neural networks trained on diverse datasets. By leveraging pretrained language models and fine-tuning them on specific tasks, this approach harnessed the power of transfer learning, enabling more efficient and effective natural language understanding and translation.

ChatGPT is an example of the downstream approach and has demonstrated the remarkable capabilities of neural networks in natural language processing. Trained on an extensive corpus of diverse text data, ChatGPT captures the intricacies of language and showcases a deep understanding of context, semantics, and grammar. Its impressive performance in generating coherent and contextually relevant responses has astonished users and experts alike, showcasing the potential of neural networks in tackling complex language-related tasks.

The downstream approach offers several advantages that have contributed to its success in natural language understanding and translation (3):

1. Transfer Learning: Pretraining models on massive datasets allows them to learn language patterns comprehensively, facilitating efficient transfer of knowledge to specific tasks.

2. Contextual Understanding: Neural networks excel at capturing contextual dependencies, enabling more accurate comprehension and translation of natural language.

3. Continuous Learning: The flexibility of neural networks allows them to learn and adapt continuously, improving over time with exposure to new data.

4. Scalability: Neural networks can process large amounts of data in parallel, enabling faster and more comprehensive language processing.

Large language model structure and mathematical model

Can ChatGPT truly comprehend language? Does it possess genuine knowledge in the way we typically understand it? Is it genuinely capable of reasoning? To effectively address these inquiries, we must delve into the workings of ChatGPT.

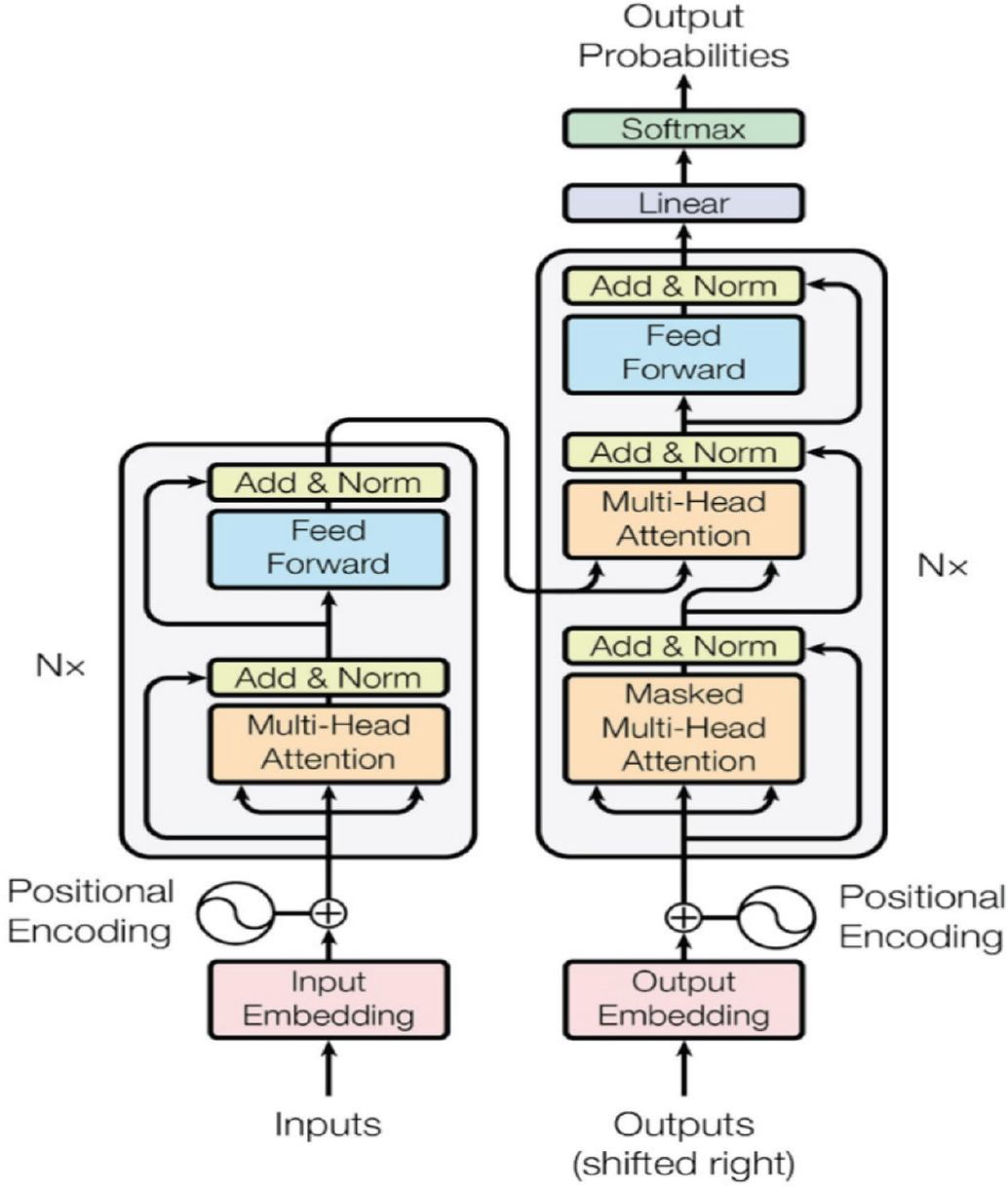

The Transformer model differs from traditional neural networks because it uses attention to process text and is mainly composed of Encoder and Decoder layers. Attention allows the model to focus on specific parts of the text as it processes it, enabling a better understanding of the context and generation of more appropriate responses. It has been successfully used in many Natural Language Processing (NLP) applications such as machine translation, text generation, and classification. It has been applied in systems like BERT, GPT-2, GPT-3, and ChatGPT (4).

Let’s imagine we have the phrase “the cat is on” and we want to complete it using a Transformer model. Here are the steps the model follows:

1. Tokenization: The first step is to divide the phrase into tokens. For simplicity, we treat each token as an individual word here; in practice, models such as GPT split text into subword units. In this case, the phrase would be divided into [“the”, “cat”, “is”, “on”].

2. Embedding: Each word is converted into a numerical vector, called an embedding. This allows the model to work with the words and represent them in a numerical space for data processing.

3. Positional Encoding: The model uses a technique called positional encoding to account for the word order in the phrase. This means the model understands that “the” is at the beginning of the phrase and “on” is at the end. To implement word positioning, Transformer models use a position vector for each word in a sentence. The position vector represents the relative position of the word within the sentence.

4. Multi-head Attention: The model utilizes multi-head attention to calculate attention between the words in the phrase. In this case, the model would calculate attention between the word “on” and the other words in the phrase, such as “the” and “cat”, to better understand the context and meaning of the word “on”. This process is repeated for the other words in the phrase. The attention vectors generated by each head are then concatenated and transformed to generate a global representation of the input.

5. Feedforward Layer: Finally, the model uses a feedforward neural network to generate a plausible and coherent response. In this case, the model could generate responses like “the cat is on the table” or “the cat is on the bed” based on the relationship between the words in the phrase and the overall context.
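The first three steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code of any real model: the function names (`tokenize`, `build_embedding_table`, `positional_encoding`, `encode`) are hypothetical, the embedding values are random rather than learned, tokens are whole words rather than subwords, and the positional encoding is the sinusoidal scheme of the original Transformer design, which is only one of several options in use.

```python
import math
import random

def tokenize(phrase):
    """Split on whitespace; real models use subword tokenizers instead."""
    return phrase.lower().split()

def build_embedding_table(vocab, dim, seed=0):
    """Assign each word a random vector; in a trained model these are learned."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for word in vocab}

def positional_encoding(position, dim):
    """Sinusoidal encoding: sine on even indices, cosine on odd indices."""
    vector = []
    for i in range(dim):
        angle = position / (10000 ** (2 * (i // 2) / dim))
        vector.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vector

def encode(phrase, table, dim):
    """Return one input vector per token: embedding plus positional encoding."""
    inputs = []
    for pos, token in enumerate(tokenize(phrase)):
        pe = positional_encoding(pos, dim)
        inputs.append([e + p for e, p in zip(table[token], pe)])
    return inputs

DIM = 4  # toy embedding size; real models use hundreds or thousands
tokens = tokenize("the cat is on")
table = build_embedding_table(sorted(set(tokens)), DIM)
model_inputs = encode("the cat is on", table, DIM)
print(tokens)             # ['the', 'cat', 'is', 'on']
print(len(model_inputs))  # 4
```

Each of the four resulting vectors carries both what the word is (the embedding) and where it sits in the phrase (the positional encoding), which is the input that the attention layers then operate on.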

In summary, Transformers use a series of transformations and computations to understand the meaning of a sentence and generate a plausible and coherent response. In order to generate a long response from a short question, the Transformer model combines techniques such as Language Modeling, Multi-head Attention, Fine-tuning, and Autoregressive Generation. Language Modeling helps the model learn to generate plausible and coherent text, while Multi-head Attention helps in understanding the context and meaning of words and phrases in the question. Fine-tuning allows adapting the model to a specific dataset to generate more relevant responses. Finally, Autoregressive Generation enables the autonomous generation of sentences and paragraphs, one word at a time, using previously generated information (5).
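Autoregressive generation can be illustrated with a deliberately tiny stand-in for the model. In the sketch below, the table `NEXT_WORD` and its probabilities are invented for illustration, and the toy predictor looks only at the last word, whereas a Transformer conditions on the entire preceding context. What the sketch does share with the real system is the loop: predict one word, append it, and feed the extended sequence back in.

```python
# Hypothetical next-word probabilities, invented for illustration only.
NEXT_WORD = {
    "on": {"the": 0.9, "a": 0.1},
    "the": {"table": 0.6, "bed": 0.4},
    "a": {"table": 1.0},
    "table": {"<end>": 1.0},
    "bed": {"<end>": 1.0},
}

def generate(prompt_tokens, max_new_tokens=5):
    """Greedy autoregressive decoding: append the most probable next word."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        candidates = NEXT_WORD.get(tokens[-1])
        if not candidates:
            break  # no prediction available for this word
        best = max(candidates, key=candidates.get)
        if best == "<end>":
            break  # the toy model signals that the sentence is complete
        tokens.append(best)
    return tokens

completion = generate(["the", "cat", "is", "on"])
print(" ".join(completion))  # the cat is on the table
```

Always taking the single most probable word makes the output deterministic; systems such as ChatGPT typically sample from the predicted distribution instead, which is why the same question can yield different answers.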

Now, let’s understand how the attention system works, which is at the core of the Transformer model.

We start with the embeddings, which for “the cat is on” could be represented as:

“the”: [0.1, 0.2, –0.3, 0.4, …]

“cat”: [0.5, –0.1, 0.2, –0.3, …]

“is”: [–0.2, 0.3, 0.1, –0]

“on”: [–0.2, 0.3, 0.1, –0.4, …]

In this example, each word is represented by a numerical vector of length n, known as an embedding. In earlier NLP systems, such embeddings were pre-calculated from a large amount of text data using algorithms such as word2vec or GloVe; in Transformer models such as GPT, the embedding table is learned together with the rest of the network during training. The model uses these embeddings to capture the context and meaning of words and phrases in the question and to generate a relevant response. A higher embedding dimension gives the model more flexibility in representing words in a numerical space, but it requires more memory and may be more challenging to train. In general, a higher embedding dimension allows the model to represent words and their contexts more accurately, improving the model’s performance. However, a dimension that is too high can lead to overfitting (poor generalization), while a dimension that is too low can result in information loss (underfitting).

After calculating the word embeddings for the words in the phrase “the cat is on”, the Transformer model combines the embedding vectors with the positional vectors to form the input passed to the multi-head attention architecture. This helps the model understand the context and meaning of words and phrases in the sentence. The multi-head attention technique works by creating multiple “heads” that independently calculate attention between the words in the phrase.

For the phrase “the cat is on”, the model might use three attention heads:

• The first attention head might calculate attention between the word “on” and the other words in the phrase, such as “the” and “cat”, to better understand the context and meaning of the word “on”.

• The second attention head might calculate attention between the word “cat” and the other words in the phrase to understand its context and meaning.

• The third attention head might calculate attention between the word “the” and the other words in the phrase.

After attention between the words in the phrase has been calculated, the outputs of the individual heads are combined to generate a global representation of the phrase, taking into account the context and meaning of the words. This representation is then used to generate a plausible and coherent response to the question (Fig. 2).
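The mechanism just described can be sketched in plain Python. This is a simplified illustration under stated assumptions: the function names are hypothetical, the input vectors reuse the first four example values for “the”, “cat”, and “on” with a made-up vector for “is”, and each head simply works on a slice of the input vector. Real models instead apply learned projection matrices for queries, keys, and values in every head, plus a learned output projection, and GPT-style decoders also mask out later words; all of that is omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

def multi_head_attention(vectors, num_heads):
    """Split each vector into num_heads slices, attend per slice, concatenate."""
    head_dim = len(vectors[0]) // num_heads
    combined = [[] for _ in vectors]
    for h in range(num_heads):
        chunk = [v[h * head_dim:(h + 1) * head_dim] for v in vectors]
        head_out, _ = attention(chunk, chunk, chunk)
        for row, out in zip(combined, head_out):
            row.extend(out)  # concatenate this head's output
    return combined

# Illustrative 4-dimensional inputs for "the cat is on".
inputs = [
    [0.1, 0.2, -0.3, 0.4],   # "the"
    [0.5, -0.1, 0.2, -0.3],  # "cat"
    [0.3, 0.1, -0.2, 0.2],   # "is" (made-up values)
    [-0.2, 0.3, 0.1, -0.4],  # "on"
]
mixed = multi_head_attention(inputs, num_heads=2)
print(len(mixed), len(mixed[0]))  # 4 4
```

Every word ends up with a new vector of the same size as before, but one that now blends in information from the other words in proportion to the attention weights.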

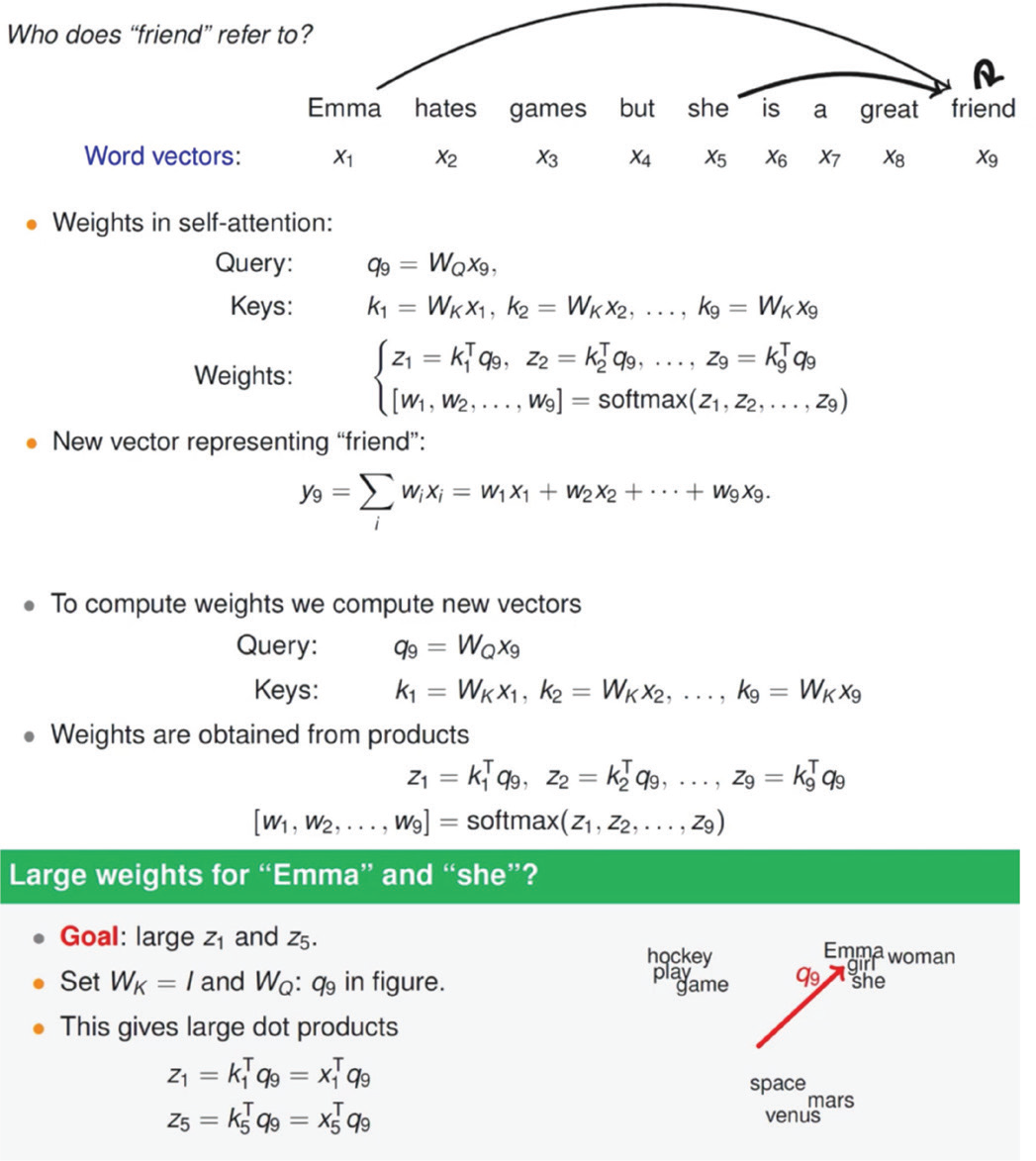

How are weighted averages calculated? In the picture below you can see an example of how the weights are calculated. Let’s review the process by which the output “friend” is generated from the input sequence:

Fig. 2 - An illustration of the complete Transformer architecture. On the left side you can see the Encoder model and on the right side the Decoder model. The two main components of both the Encoder and the Decoder are the Multi-Head Self-Attention layers and the Feedforward layers (4).
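In formula form, the weighted average computed by one attention head is usually written as scaled dot-product attention. The symbols are the conventional ones and are introduced here as assumptions: $q_i$, $k_j$, and $v_j$ are the query, key, and value vectors derived from the word vectors, $d_k$ is the dimension of the keys, and $\alpha_{ij}$ is the weight that word $i$ assigns to word $j$.

```latex
\alpha_{ij} = \frac{\exp\!\left( q_i \cdot k_j / \sqrt{d_k} \right)}
                   {\sum_{l} \exp\!\left( q_i \cdot k_l / \sqrt{d_k} \right)},
\qquad
o_i = \sum_{j} \alpha_{ij} \, v_j,
\qquad
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left( \frac{Q K^{\top}}{\sqrt{d_k}} \right) V
```

Because the weights $\alpha_{ij}$ are positive and sum to 1 over $j$, each output $o_i$ is a weighted average of the value vectors, with the largest weights going to the words most relevant to word $i$.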

We have observed how GPT translates words into numerical representations. However, it is important to note that the system lacks an inferential engine or symbolic AI for knowledge representation and cognition. Instead, it functions as an impressive statistical predictor, generating output vectors based on input vectors of words. Its strength lies in its ability to effectively analyze and predict relationships between word vectors without explicit symbolic reasoning or inference capabilities.

I have extensively evaluated ChatGPT (version 4) across a range of tasks including essay writing, document summarization, concept explanations, language enhancement, conversational experiences to foster empathy and deep connections, as well as cognitive problem-solving and reasoning. ChatGPT demonstrates remarkable performance in language comprehension and processing. It exhibits a capacity to mimic reasoning and cognition, although it is crucial to note that this is more akin to an illusion of reasoning rather than genuine cognitive abilities.

In most cases, the system generates highly satisfactory answers. However, it’s important to acknowledge that there can be some errors in the responses. Nevertheless, it showcases a solid performance in the realm of medical knowledge. I specifically conducted tests using complex clinical scenarios sourced from reputable journals like JAMA, and the system provided impressively accurate responses that demonstrated clinical reasoning and medical expertise.

Below is an example of a clinical case, published as a JAMA Neurology Clinical Challenge (6), of a 13-year-old boy with subacute-onset spastic gait, for which we sought the assistance of ChatGPT in reaching a clinical diagnosis.

Q: I am a neurologist can you help with the diagnosis of this case? here the summary:

A 13-year-old boy with an unsteady gait was referred for evaluation. He had been well until 1 month prior, when he developed lower limb weakness after eating a vegetarian diet for 15 days. His medical and family histories were unremarkable. His walking had become unsteady, and he was less talkative. On examination, he scored 24 on a Mini-Mental State Examination, with impairments in temporal and spatial orientation, calculation, and short-term memory. Horizontal nystagmus was observed. His lower limb strength was 4 of 5 proximally and 5− of 5 distally, with increased muscle tone. Patellar reflexes were brisk. Ankle clonus and Babinski signs were present bilaterally. His gait was spastic (Video). Blood test results indicated normocytic anemia (hemoglobin level, 126 g/L; reference, >130 g/L [to convert to grams per deciliter, multiply by 0.1]), borderline folate elevation (2.15 ng/mL; reference, >2 μg/L [to convert nanomoles per liter, multiply by 2.266]), and an increased homocysteine level (8.85 mg/L; reference, <2.03 mg/L [to convert to micromoles per liter, multiply by 7.397]). Results of stool analysis; liver and kidney function tests; myocardial enzyme, electrolyte, ammonia, trace element, and B12 levels; thyroid function, arterial blood gas, antinuclear antibodies, and intrinsic factor antibody tests; and an infection panel test were unremarkable. Magnetic resonance imaging showed mild cerebral atrophy and a hyperintense T2-weighted signal in the cervical spinal cord. Electromyography results were normal. Electroencephalography showed diffuse background slowing with scattered epileptiform discharges. Urine organic acid analysis indicated methylmalonic acidemia. A serum amino acid analysis was nondiagnostic. Cerebrospinal fluid was normal.

ChatGPT: Based on the information provided, the 13-year-old boy presents with several neurological symptoms including unsteady gait, lower limb weakness, decreased talkativeness, impaired cognitive function, horizontal nystagmus, increased muscle tone, brisk reflexes, bilateral Babinski signs, and spastic gait. The laboratory findings show normocytic anemia, borderline folate elevation, and increased homocysteine level. Imaging studies reveal mild cerebral atrophy and a hyperintense T2-weighted signal in the cervical spinal cord. Additionally, methylmalonic acidemia is detected in urine organic acid analysis.

Based on these findings, the most likely diagnosis for this case is subacute combined degeneration of the spinal cord (SCD). SCD is primarily caused by vitamin B12 deficiency, which can result from a vegetarian diet lacking in B12 or impaired absorption of the vitamin. The neurological symptoms seen in this case, such as gait disturbances, weakness, cognitive impairment, and nystagmus, are consistent with SCD.

The elevated homocysteine level is indicative of impaired methionine metabolism, which is commonly seen in vitamin B12 deficiency. The neurological manifestations of SCD can be attributed to demyelination and axonal degeneration in the dorsal and lateral columns of the spinal cord, leading to the characteristic spastic gait and other neurological deficits.

Treatment for SCD involves correcting the underlying vitamin B12 deficiency. In this case, it would be important to supplement the patient with vitamin B12, either through intramuscular injections or high-dose oral supplementation. Folate levels should also be monitored and managed appropriately, as folate supplementation alone can mask the hematological manifestations of B12 deficiency without improving the neurological symptoms.

Close follow-up and monitoring of the patient’s neurological status, along with serial vitamin B12 levels, should be conducted to assess response to treatment. Physical and occupational therapy may be beneficial for addressing the motor deficits and optimizing functional outcomes.

It is important to note that the diagnosis and management of this case should be conducted by a qualified healthcare professional based on a comprehensive evaluation of the patient’s medical history, physical examination, laboratory findings, and additional diagnostic tests that may be necessary.

Q: Tell me more

ChatGPT: Subacute combined degeneration of the spinal cord (SCD) is a neurological condition primarily caused by vitamin B12 deficiency. Vitamin B12, also known as cobalamin, plays a crucial role in the synthesis of myelin, the protective covering of nerve fibers. Without adequate levels of vitamin B12, the myelin sheath becomes damaged, leading to neurological dysfunction.

The most common cause of vitamin B12 deficiency is a dietary insufficiency, particularly in individuals who follow a strict vegetarian or vegan diet, as plant-based foods do not naturally contain vitamin B12. Animal products, such as meat, fish, dairy, and eggs, are the primary sources of this essential vitamin. However, vitamin B12 deficiency can also result from other factors, including impaired absorption due to gastrointestinal disorders (e.g., pernicious anemia, celiac disease), certain medications (e.g., proton pump inhibitors, metformin), or surgical removal of parts of the gastrointestinal tract.

The clinical presentation of SCD is characterized by a gradual onset and progressive neurological symptoms. These symptoms typically include unsteady gait, weakness, sensory disturbances, cognitive impairment, mood changes, and in severe cases, optic neuropathy. The neurological deficits are commonly bilateral and symmetric, affecting both the upper and lower extremities.

The classic findings in SCD are related to spinal cord dysfunction. The spastic gait, which is seen in this case, results from the involvement of the corticospinal tracts in the lateral columns of the spinal cord. Other signs include hyperreflexia (brisk reflexes), extensor plantar responses (Babinski signs), increased muscle tone (spasticity), and sensory disturbances (such as vibration and proprioception deficits).

The laboratory findings in SCD often reveal megaloblastic anemia, characterized by normocytic red blood cells with hypersegmented neutrophils. Megaloblastic anemia occurs due to impaired DNA synthesis in red blood cell precursors. In addition, the elevation of homocysteine levels and the presence of methylmalonic acid in urine organic acid analysis are characteristic of vitamin B12 deficiency.

Neuroimaging studies may show cerebral atrophy, particularly affecting the posterior columns, and may also reveal hyperintense signals on T2-weighted images in the cervical spinal cord. These findings are consistent with the demyelination and degeneration observed in SCD.

Treatment for SCD involves addressing the underlying vitamin B12 deficiency. Vitamin B12 supplementation is necessary to restore adequate levels and prevent further neurological deterioration. In cases of severe deficiency or malabsorption, intramuscular injections of vitamin B12 are often preferred initially, followed by long-term oral supplementation. The dosage and duration of treatment depend on the severity of the deficiency and individual patient factors.

It is important to note that early diagnosis and prompt treatment are crucial for preventing irreversible neurological damage in SCD. Regular monitoring of vitamin B12 levels, along with clinical follow-up to assess improvement in symptoms, is essential. In some cases, despite adequate vitamin B12 supplementation, neurological deficits may persist or progress if there has been significant damage to the spinal cord.

Overall, the prognosis of SCD is generally favorable with early diagnosis and appropriate treatment. However, the extent of neurological recovery may vary depending on the duration and severity of the deficiency before treatment initiation. Multidisciplinary care involving neurologists, hematologists, and dietitians is often necessary to optimize outcomes and provide comprehensive management for individuals with SCD.

Q: Can you help with a differential diagnosis? He is a patient of mine

ChatGPT: Certainly! I can provide you with a list of potential differential diagnoses based on the clinical presentation and findings in your patient. It’s important to keep in mind that a definitive diagnosis should be made by a qualified healthcare professional based on a thorough evaluation of the patient’s history, physical examination, and additional diagnostic tests as deemed necessary. Here are some possible differential diagnoses for the case you presented:

1. Subacute combined degeneration of the spinal cord (SCD) – As previously discussed, SCD is the most likely diagnosis given the symptoms, laboratory findings, and imaging results. Vitamin B12 deficiency is the underlying cause of this condition.

2. Multiple sclerosis (MS) – MS is a chronic inflammatory demyelinating disease of the central nervous system that can present with a wide range of neurological symptoms. It can cause gait disturbances, weakness, cognitive impairment, nystagmus, and spinal cord lesions seen on MRI. However, the presence of normocytic anemia and elevated homocysteine levels would be atypical for MS.

3. Vitamin B12 deficiency without subacute combined degeneration – Vitamin B12 deficiency can cause neurological symptoms even without the characteristic spinal cord involvement seen in SCD. It can lead to peripheral neuropathy, cognitive impairment, and psychiatric symptoms. However, the combination of spinal cord abnormalities on imaging and the presence of methylmalonic acidemia in urine organic acid analysis make SCD a more likely diagnosis in this case.

4. Other nutritional deficiencies – Nutritional deficiencies other than vitamin B12 deficiency can also present with neurological symptoms. For example, deficiencies in vitamin B6, vitamin E, thiamine (vitamin B1), or copper can lead to neurological manifestations. However, the combination of specific clinical features, laboratory findings, and imaging results in this case make SCD a more probable diagnosis.

5. Hereditary spastic paraplegia – Hereditary spastic paraplegia (HSP) is a group of genetic disorders characterized by progressive spasticity and weakness in the lower limbs. It can present with gait abnormalities similar to those observed in SCD. However, the acute onset of symptoms, laboratory abnormalities, and imaging findings in your patient are not typical of HSP.

6. Cervical myelopathy – Cervical myelopathy refers to compression or degenerative changes in the cervical spinal cord, leading to neurological dysfunction. It can cause gait disturbances, spasticity, and hyperreflexia. However, the systemic features such as normocytic anemia, elevated homocysteine levels, and cognitive impairment are not typically associated with isolated cervical myelopathy.

These are just a few potential differential diagnoses to consider based on the provided information. It is essential to consult with a neurologist or another appropriate healthcare professional who can perform a comprehensive evaluation of your patient and conduct further investigations to reach an accurate diagnosis.

Q: Any genetic condition?

ChatGPT: Yes, there are several genetic conditions that can present with symptoms similar to those observed in the case you presented. Here are a few genetic conditions that could be considered in the differential diagnosis:

1. Methylmalonic acidemia (MMA) – MMA is a group of genetic disorders characterized by impaired metabolism of methylmalonic acid. It can result from various enzyme deficiencies or defects in cobalamin (vitamin B12) metabolism. MMA can present with neurological symptoms, including spasticity, cognitive impairment, and gait disturbances. The presence of methylmalonic acidemia in urine organic acid analysis suggests this as a possibility in your patient.

2. Homocystinuria – Homocystinuria is an inherited metabolic disorder characterized by impaired metabolism of homocysteine, resulting in elevated levels of homocysteine in the blood. It can lead to neurological manifestations, including cognitive impairment, psychiatric symptoms, and gait abnormalities. The increased homocysteine level observed in your patient could be indicative of homocystinuria.

3. Friedreich’s ataxia – Friedreich’s ataxia is an autosomal recessive genetic disorder characterized by progressive degeneration of the nervous system, primarily affecting the spinal cord and peripheral nerves. It typically presents with gait ataxia, lower limb weakness, and loss of deep tendon reflexes. However, the presence of spasticity and hyperreflexia, as well as the laboratory findings and imaging results, make Friedreich’s ataxia less likely in this case.

4. Hereditary spastic paraplegia (HSP) – HSP is a genetically heterogeneous group of disorders characterized by progressive spasticity and weakness in the lower limbs. It can present with gait disturbances similar to those observed in your patient. HSP can be caused by mutations in various genes involved in the development and function of the corticospinal tracts. Genetic testing for HSP-related genes may be considered in the evaluation of your patient.

These are just a few examples of genetic conditions that could be considered in the differential diagnosis. Genetic testing and consultation with a geneticist or neurologist specializing in genetic disorders would be appropriate steps to further evaluate the possibility of a genetic condition in your patient.

The correct diagnosis was methylmalonic acidemia (MMA). It should be noted that ChatGPT was able to provide it after a thorough interaction with the specialist. The case indicates that the tool is unable to assure a prima facie bullet-proof diagnosis but can be used as an experienced colleague to foster a productive conversation that eventually generates the correct answer.

From a practitioner standpoint, this is the more desirable scenario as it allows the doctor to explore and be fully aware of all the forks of the diagnostic process.

ChatGPT provides the option to generate multiple outputs for the same prompt, so an answer can be regenerated several times. This feature is particularly useful when dealing with differential diagnosis problems, as it enables the exploration of almost the entire checklist of possible diagnoses by simply regenerating the answer. By doing so, users can have an experience similar to that of apps like Isabel Healthcare, which automatically generate the differential diagnosis.
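Regeneration yields different answers because the model samples from a probability distribution rather than always returning its single top choice. The sketch below illustrates the idea on a toy scale. Everything in it is an assumption made for illustration: the scores in `SCORES` are invented, the function names are hypothetical, and a real model samples one token at a time rather than one whole diagnosis.

```python
import math
import random

def softmax_with_temperature(scores, temperature=1.0):
    """Convert scores to probabilities; lower temperature sharpens the peak."""
    scaled = [s / temperature for s in scores.values()]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return {name: e / total for name, e in zip(scores, exps)}

def regenerate(scores, runs, temperature=1.0, seed=0):
    """Draw one answer per run, as if pressing 'regenerate' repeatedly."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(scores, temperature)
    names = list(probs)
    weights = [probs[n] for n in names]
    return [rng.choices(names, weights=weights, k=1)[0] for _ in range(runs)]

# Hypothetical model scores for the leading diagnosis in an answer.
SCORES = {
    "subacute combined degeneration": 2.0,
    "methylmalonic acidemia": 1.2,
    "multiple sclerosis": 0.5,
    "hereditary spastic paraplegia": 0.3,
}

answers = regenerate(SCORES, runs=20)
for name, p in softmax_with_temperature(SCORES).items():
    print(f"{name}: probability {p:.2f}, drawn {answers.count(name)} times")
```

With enough regenerations, less probable candidates also surface, which is the behavior that makes repeated regeneration resemble walking through a differential diagnosis checklist.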

One of the greatest advantages of using ChatGPT is the ability to interact with it in your native language while still receiving answers of the same quality and depth as in other languages. I conducted tests in Italian and compared them with those in English. The results were essentially the same.

In my experience, ChatGPT has proved superior to the traditional medical knowledge decision support systems found on the market. With ChatGPT, you can engage in a natural language dialogue that resembles human-like reasoning. It provides a powerful workflow for addressing your queries and offers comprehensive assistance in making well-informed decisions regarding patient care. It aids doctors in thoroughly investigating all potential direct and indirect connections among various concepts and knowledge items. This enables them to foster creative thinking and minimize the risk of anchoring and other typical biases that can result in misdiagnosis or delayed diagnosis. Furthermore, integrating ChatGPT with Microsoft’s search engine (Bing AI) or Google’s (Bard) enhances the experience further. You gain access to synthesized and critically appraised conclusions sourced from reputable and high-quality systems like DynaMed or UpToDate. This integration ensures that you receive reliable and vetted information. Additionally, ChatGPT provides convenient links to access the specific knowledge items you require, augmenting the depth of your knowledge exploration (7).

Another intriguing application of language models like ChatGPT is the seamless transfer of clinical information into electronic medical records (EMRs). Additionally, they can effectively analyze unstructured or fragmented information present in patient records stored within EMRs, helping doctors achieve efficacy when managing patients.

The final and most captivating potential application of large language models (LLMs) lies in their integration through application programming interface (API) into any system with the ability to perform actions or make decisions. By serving as the human-machine interface, ChatGPT can empower autonomous agents to autonomously carry out actions and make informed decisions (8).

At present, while I genuinely appreciate the remarkable level of performance already achieved by ChatGPT, I admit feeling somewhat uneasy about fully entrusting it with crucial decisions that profoundly impact human lives. Although ChatGPT has successfully passed rigorous examinations like the United States Medical Licensing Exam and the bar exam, would we genuinely rely on the system for final diagnoses and treatment decisions, instead of a human physician? Would we confidently depend on such a system to represent us in court, replacing a human lawyer? The main concern lies in the system’s lack of logic and common sense. Despite its astonishing intelligence in numerous situations, ChatGPT can be surprisingly inadequate in others.

Allow me to provide a couple of examples that illustrate this point using GPT-4.

Q1: Hallo GPT. I left 5 clothes to dry out in the sun. it took them 5 hours to dry completely. How long would it take to dry 30 clothes?

ChatGPT: Assuming the conditions remain constant and the drying time is directly proportional to the number of clothes, we can use a simple proportion to find the estimated drying time for 30 clothes.

Let’s set up the proportion:

5 clothes / 5 hours = 30 clothes / x hours

To solve for x (the time it would take to dry 30 clothes), we can cross-multiply and solve for x:

5 * x = 5 * 30

5x = 150

Dividing both sides of the equation by 5:

x = 150 / 5

x = 30

Therefore, it would take approximately 30 hours to dry 30 clothes, assuming the drying conditions and other factors remain constant.
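
The proportional model in this answer is where the reasoning fails: clothes hung in the sun dry simultaneously, so the drying time does not grow with their number as long as they all fit on the line. The following Python sketch contrasts the two models. The function names and the `line_capacity` parameter are assumptions introduced here for illustration and are not part of the original exchange.

```python
import math


def proportional_drying_time(base_items, base_hours, items):
    """Flawed model from the answer above: time scales with the item count."""
    return base_hours * items / base_items


def parallel_drying_time(base_hours, items, line_capacity):
    """Items dry at the same time; extra batches are needed only when
    they do not all fit on the line at once (capacity is an assumption)."""
    batches = math.ceil(items / line_capacity)
    return base_hours * batches


# The model's answer versus the common-sense answer for 30 clothes.
print(proportional_drying_time(5, 5, 30))
print(parallel_drying_time(5, 30, line_capacity=30))
```

Under the parallel model, 30 clothes still take about 5 hours if they can all be hung at once, and longer only if several batches are needed.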

Q2: Hallo ChatGPT. I have 12 liter jug and a 6 liter jug. I want to measure 6 liter. How do I do it?

ChatGPT: To measure exactly 6 liters using a 12-liter jug and a 6-liter jug, you can follow these steps:

1. Fill the 12-liter jug completely with water.

2. Pour the water from the 12-liter jug into the 6-liter jug. This will leave you with 6 liters of water in the 12-liter jug.

3. Empty the 6-liter jug.

4. Pour the remaining 6 liters of water from the 12-liter jug back into the empty 6-liter jug.

Now, you have successfully measured 6 liters of water using the given jugs.
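
The correct answer requires a single step: fill the 6-liter jug. A classical symbolic method finds this shortest solution directly. The sketch below is a plain breadth-first search over jug states, offered only as an illustration of explicit symbolic search; the function name `measure` and the wording of the moves are hypothetical choices, not something taken from ChatGPT or from any library.

```python
from collections import deque


def measure(capacities, target):
    """Breadth-first search over jug states.

    Returns the shortest list of moves that leaves `target` liters
    in some jug, or None if the target cannot be reached.
    """
    start = tuple(0 for _ in capacities)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if target in state:
            return path
        for i, cap in enumerate(capacities):
            # Fill or empty jug i.
            candidates = [
                (state[:i] + (cap,) + state[i + 1:], f"fill the {cap}-liter jug"),
                (state[:i] + (0,) + state[i + 1:], f"empty the {cap}-liter jug"),
            ]
            # Pour jug i into every other jug j until i is empty or j is full.
            for j, other_cap in enumerate(capacities):
                if i != j:
                    amount = min(state[i], other_cap - state[j])
                    nxt = list(state)
                    nxt[i] -= amount
                    nxt[j] += amount
                    candidates.append(
                        (tuple(nxt),
                         f"pour the {cap}-liter jug into the {other_cap}-liter jug")
                    )
            for nxt, move in candidates:
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [move]))
    return None


print(measure((12, 6), 6))  # ['fill the 6-liter jug']
```

Because breadth-first search explores states in order of the number of moves, the first solution it returns is guaranteed to be a shortest one, which is the kind of guarantee a purely statistical text generator cannot offer.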

Conclusions

It is evident that the system lacks genuine logic or common sense. Enlarging and diversifying the training dataset may lead to improved answers, but scale does not seem to be the core issue here. What appears to be at stake is the challenge of abstraction, generalization, and symbolic logic, which together make up what we commonly refer to as common sense. As described earlier, ChatGPT adopts a computational approach to natural language, converting words into numbers for processing within the GPT model. However, it lacks true experiential understanding of the world and the ability to derive a functional representation of reality. This prompts several questions: Can logic emerge automatically from a larger volume of data and stimuli? How did logic originate in humans? Is logic an inherent property of living organisms, or can it arise autonomously within a vast neural network as an emergent phenomenon? The truth is, we still lack a comprehensive understanding of how logic developed in humans. Similarly, the mysteries surrounding consciousness, self-perception, and subjective experiences persist. Moreover, we remain uncertain about the emergent phenomena that could arise within a large neural network constantly exposed to stimuli.

In my opinion, language serves as a powerful tool for conveying meaning. It utilizes metaphors that draw upon real-life experiences, which can only be truly understood by a conscious and sentient being. For instance, when I say, “the debate is firing up,” I am not suggesting that an actual fire is igniting during the debate. ChatGPT analyzes the given phrase and generates the text that is statistically most likely to follow, creating the illusion of possessing knowledge. However, it is crucial to recognize that these words are devoid of true meaning for ChatGPT, as it has no understanding of conversations, fire, or lived conflicts.

Despite its lack of understanding of medicine, how could a system like ChatGPT be useful in clinical practice? I think, as shown by the clinical case, that these systems can help medical doctors even at this stage of development. They provide non-human, unbiased, and almost infinite levels of information that the human brain cannot achieve. In other words, they can act as idiot savants who know everything but understand nothing, thereby making the interaction with human experts extremely valuable and productive. If the process becomes truly bidirectional, great advances in medicine are to be expected.

Moreover, to enhance performance and achieve greater accuracy, one potential solution lies in adopting a hybrid approach that strikes a balance between mainstream and downstream methods. This concept translates into Neuro-symbolic AI, which combines the strengths of neural networks and symbolic reasoning to achieve more robust and reliable results.

Neuro-symbolic AI is a new approach to the development of intelligent machines that combines the strengths of both symbolic and neural approaches. The symbolic approach is based on the use of formal logic and rules, while the neural approach is based on the use of artificial neural networks that mimic the functioning of the human brain. By combining these two approaches, Neuro-symbolic AI has the potential to revolutionize the field of AI and create machines that are more intelligent and capable of solving complex problems.

One of the key advantages of Neuro-symbolic AI is its ability to handle both symbolic and non-symbolic data. Symbolic data is data that can be represented using symbols, such as text or numbers, while non-symbolic data is data that cannot be represented using symbols, such as images or sound. Traditional symbolic AI systems are good at handling symbolic data but struggle with non-symbolic data, while neural networks are good at handling non-symbolic data but struggle with symbolic data. Neuro-symbolic AI combines the strengths of both approaches, allowing it to handle both types of data effectively.

Another advantage of Neuro-symbolic AI is its ability to learn from both data and rules. Traditional machine learning approaches are based on the use of large amounts of data to train models, while symbolic approaches are based on the use of rules to represent knowledge. Neuro-symbolic AI combines these two approaches, allowing it to learn from both data and rules. This makes it more efficient at learning and better at generalizing about new situations.
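
One simple way to picture this combination is a propose-and-verify pattern: a learned model proposes scored candidates, and explicit rules discard the candidates that contradict known facts. The sketch below is a toy illustration of that single pattern and not a description of any existing Neuro-symbolic system. The `Candidate` class, the `neuro_symbolic_rank` function, the rule, and all labels and scores are hypothetical and invented here; the scores merely stand in for the output of a neural network.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Candidate:
    label: str
    score: float  # stand-in for a neural model's confidence


# A rule returns True when the candidate is consistent with the known facts.
Rule = Callable[[Candidate, dict], bool]


def neuro_symbolic_rank(candidates, rules, facts):
    """Keep candidates that satisfy every symbolic rule, ranked by score."""
    consistent = [c for c in candidates if all(rule(c, facts) for rule in rules)]
    return sorted(consistent, key=lambda c: c.score, reverse=True)


def respects_age_exclusions(candidate, facts):
    """Hypothetical rule: some labels are excluded for patients under 18."""
    excluded = {"adult_only_condition"} if facts["age"] < 18 else set()
    return candidate.label not in excluded


# Invented toy inputs, for illustration only.
candidates = [
    Candidate("adult_only_condition", 0.9),
    Candidate("condition_a", 0.6),
    Candidate("condition_b", 0.3),
]
ranked = neuro_symbolic_rank(candidates, [respects_age_exclusions], {"age": 13})
print([c.label for c in ranked])  # ['condition_a', 'condition_b']
```

In this sketch the highest-scoring candidate is rejected because it violates a rule, and the rule that rejected it can be named, which also hints at why such hybrids may be easier to explain.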

Neuro-symbolic AI also has the potential to be more explainable than traditional AI approaches. One of the challenges of traditional machine learning approaches is that they can be difficult to interpret and understand (9).

This is because they are based on complex mathematical models that are difficult to explain in simple terms. Neuro-symbolic AI, on the other hand, is based on a combination of rules and neural networks, which makes it easier to understand and interpret. This matters in applications where users need to understand how the AI system arrived at a particular decision or recommendation.

Neuro-symbolic AI could revolutionize many fields, including healthcare, finance, and transportation. In healthcare, it could be used to develop more accurate and personalized diagnosis and treatment plans. In finance, it could be used to develop more effective trading strategies and risk management systems. In transportation, it could be used to develop more efficient and safer autonomous vehicles.

Neuro-symbolic AI might be a promising approach to the development of intelligent machines that combines the strengths of both symbolic and neural approaches. Its ability to handle both symbolic and non-symbolic data, learn from both data and rules, and be more explainable than traditional AI approaches should make it a powerful tool for solving complex problems in many fields. As research in this area continues to advance, we can expect to see many exciting applications of Neuro-symbolic AI in the years to come.

No matter where we stand in the current debate on AI and the future of medicine, the intrinsic advantage offered by AI-based platforms in terms of computing speed, number of computational elements, and storage capacity makes it impossible to discount the opportunities offered by this technological revolution, or its effects on people’s lives and on the fundamental nature and development of the medical profession. These advancements are much needed now that the intrinsic limits of one-size-fits-all approaches in therapy and diagnosis are becoming evident and a switch to precision medicine is becoming unavoidable.

Acknowledgments

I wish to thank Prof. Stefano Sensi, Chair, Department of Neuroscience, Imaging, and Clinical Science, University “G. d’Annunzio” Chieti-Pescara, Professor of Neurology, CAST (Center for Advanced Studies and Technology), for his valuable contribution to this article. I am thankful also to my friend Dr. Ing. Daniele Campo (Dipartimento di Ingegneria Elettronica – Politecnico di Milano) for his precious advice and deep insights in the AI domain.

Disclosures

Conflict of interest: The author declares no conflict of interest.

Financial support: This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Dr. Valeria Scotti, Section Editor for Science Metrics, given the relevance and impact of the topic covered by this article in the fast-changing scenario of artificial intelligence (AI), has decided to accept and publish it without a formal peer-review process.

References

- 1. Hornik K, Stinchcombe M, White H. Multilayer feedforward networks are universal approximators. Neural Networks. 1989;2(5):359-366. Online (Accessed June 2023).

- 2. DEEP MIND Mathematics, Machine Learning & Computer Science: The Universal Approximation Theorem. Online (Accessed June 2023).

- 3. Devlin J, Chang M-W, Lee K, Toutanova K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. 2018. arXiv:1810.04805 (Accessed June 2023).

- 4. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. 31st Conference on Neural Information Processing Systems (NIPS 2017). Online

- 5. Radford A, Narasimhan K, Salimans T, Sutskever I. Improving language understanding by generative pre-training. 2018. Online (Accessed June 2023).

- 6. Xie N, Yang J, Sun Q. A 13-year-old boy with subacute-onset spastic gait. JAMA Neurol. 2021;78(9):1151-1152.

- 7. Wang D-Q, Feng L-Y, Ye J-G, Zou J-G, Zheng Y-F. Accelerating the integration of ChatGPT and other large-scale AI models into biomedical research and healthcare. MedComm Future Med. 2023;2(2):e43.

- 8. Andreas J. Language Models as Agent Models. 2022. arXiv:2212.01681v1 (Accessed June 2023).

- 9. Hitzler P, Eberhart A, Ebrahimi M, Sarker MK, Zhou L. Neuro-symbolic approaches in artificial intelligence. Natl Sci Rev. 2022;9(6):nwac035.