
AboutOpen | 2022; 9: 15-20 ISSN 2465-2628 | DOI: 10.33393/ao.2022.2394 POINT OF VIEW


A mixed reality telemedicine system for collaborative ultrasound diagnostics and ultrasound-guided interventions

ABSTRACT

In acute care settings (emergency room [ER], intensive care unit [ICU], operating room [OR]), inexperienced physicians commonly have difficulty making an ultrasound (US) diagnosis and have to consult an expert. In this article, we present a methodology by which expert physicians can take part in an US examination from any location through virtual and augmented reality. The expert can view the set-up and the US images obtained by the examiner and discuss the clinical case over video chat. In turn, s/he can advise on the proper positioning of the US transducer on the patient with the help of a virtual US transducer. This technology can be used to obtain an expert opinion from a remote location, whether inside the same hospital or many miles away. Similarly, it can be used for remote training; whatever the indication, it is intended to lead to improved care. We discuss two different use cases inside an ER: US for a Focused Assessment with Sonography in Trauma (FAST) examination and US for the insertion of a central venous catheter (CVC). Currently, we position this technology at Technology Readiness Level 2, as the concept is formulated and the practical application is identified. The basic properties of the algorithms are defined and the basic principles are coded. We performed experiments with parts of the technology in an artificial environment. We asked a doctor, Arnaud Bosteels, to review this method and create this article together.

Keywords: Acute care, Mixed reality, Remote assistance, Telemedicine, Ultrasound examination, Ultrasound-guided interventions

Received: March 8, 2022

Accepted: April 7, 2022

Published online: April 20, 2022

AboutOpen - ISSN 2465-2628 - www.aboutscience.eu/aboutopen

© 2022 The Authors. This article is published by AboutScience and licensed under Creative Commons Attribution-NonCommercial 4.0 International (CC BY-NC 4.0).

Commercial use is not permitted and is subject to Publisher’s permissions. Full information is available at www.aboutscience.eu

Introduction

Since its introduction in the 1950s, ultrasound (US) has become one of the most widely used imaging modalities in the world and has applications in nearly every medical specialty. But US examinations, and especially US-guided interventions, are hard to learn and require much training to perform at an acceptable standard. This is a real challenge because, on the one hand, training opportunities are scarce and, on the other hand, they imply practicing on real patients. Moreover, in most countries, US training is not part of the standard medical curriculum. Therefore, most practitioners look for alternative education opportunities, but these are quite expensive and, once booked, can easily be cancelled due to COVID-19. As a result, second opinions are requested daily in nearly every hospital.

For over 10 years, emergency rooms (ERs) across the European Union (EU) have been overcrowded and often lack experienced staff members (1). As published by the European Regional Office of the World Health Organization (WHO), ER staff in the EU have to treat more than 100 million patients every year, often because of insufficient primary care (2).

Focused assessment with sonography in trauma

Between 7% and 10% of these patients (up to 10 million annually) are treated for nonspecific abdominal pain or abdominal trauma (3,4). One part of the standard diagnostic procedure for these ER patients is an US examination called “FAST” (Focused Assessment with Sonography in Trauma).

This FAST examination, however, is often performed by inexperienced physicians because in many EU and non-EU countries there is a lack of US experts in the emergency room (1,5,6). This leads to a substantial loss of medical quality, as the success of US examinations correlates with the US experience of the physician (7,8). Sometimes physicians are unable to make a diagnosis, so an expert physician needs to be called. It takes time to get the expert to the emergency room and then repeat the FAST examination to confirm the diagnosis. With our method, the expert could join the examination from a remote location through virtual space and “see through the eyes” of the examiner while communicating with him/her in real time. Moreover, due to frequent overcrowding, ERs across Europe need to save time during diagnostic procedures without losing quality. If ERs cannot save time or have to lower their quality, the consequences will be:

• more mistreated patients

• more and higher stress among personnel

○ more errors in the ER

○ reduced personnel

• higher costs due to misdiagnoses and higher inpatient costs

Central venous catheters

A common intervention in the ER is the placement of a central venous catheter (CVC), also known as a central line, central venous line, or central venous access catheter. CVCs are commonly placed in a large vein of the neck (internal jugular vein), the upper chest (subclavian vein), or the groin (femoral vein). They are mainly used for prolonged administration of drugs or fluids, or if the administered substance is known to be toxic to smaller/peripheral veins. They can also be used for advanced hemodynamic monitoring. The catheters used are usually between 15 and 30 cm in length, made of silicone or polyurethane, and have one or multiple lumens.

To minimize the patient’s risk, the region of a candidate vein is inspected by US before the needle is inserted. If a suitable vein is found, the skin is disinfected and a local anesthetic is applied. A hollow needle is then inserted into the vein. When the US image shows that the needle is well positioned inside the vein, a guidewire is advanced through the needle, and a catheter is then positioned in the vein over the guidewire (Seldinger technique).

The greatest risk within this procedure exists during the puncture of the vein. The risks depend on the site of access and the patient’s condition, but are essentially:

• accidental puncture of adjacent arteries with

○ large hematoma formation up to compression of adjacent structures (e.g., respiratory tract or lungs)

○ thromboembolism

• damage to adjacent nerves and other adjacent structures

• pleural injury, possibly with pneumothorax

• infections

As with the FAST examination, the successful placement of a CVC correlates with the US experience of the physician.

Problem

As mentioned before, physicians in the ER are faced with the following problems:

1. In most countries, US education is not part of the standard medical training

2. ERs are overcrowded

3. US is hard to learn

4. US needs much training to perform at a high level of quality

5. Lack of experienced staff members

While the causes of the first two problems are essentially external to the ER—namely in the respective health system—and are purely organizational, problems 3, 4, and even 5 can be minimized by appropriate technical solutions.

Concept and methods

To solve or minimize the effects of the listed problems, we designed a tele-assistance tool that will allow expert physicians to support less experienced physicians in the emergency room during diagnostic procedures like FAST and interventions like the placement of CVCs.

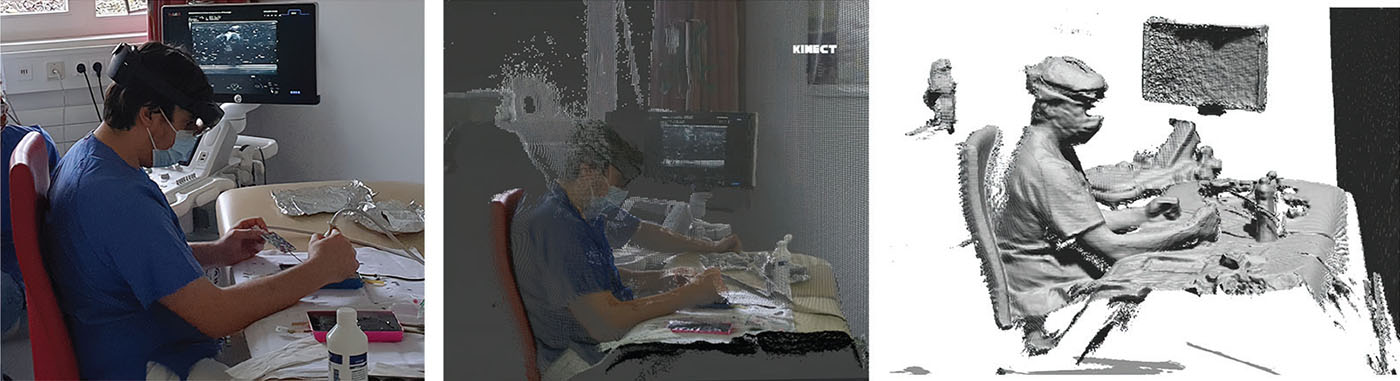

The ER physician performs the US in the emergency room while wearing an augmented reality (AR) headset (HoloLens 2). The emergency room is also equipped with a 3D scanner to create a 3D point cloud of the US examination.

The physician can see the US image projected on the patient through the HoloLens glasses, making it easier to focus on that area during the examination. S/he does not have to look at the US device monitor.

If the examining physician is uncertain about making a diagnosis, our system can be used to connect to a remote expert physician and ask his/her opinion. The expert wears a virtual reality (VR) headset (e.g., HTC Vive) through which s/he can see the US image as well as the 3D point cloud of the patient and the ER. With the help of the VR headset controller, the expert can place a virtual US transducer in the shared virtual space and can thus give advice and show how to scan correctly. With the HoloLens, the virtual transducer can be visualized in the correct location on the patient, allowing the doctor in the emergency room to see the position suggested by the expert, as shown in Figure 1. The expert can also connect to the HoloLens camera so that s/he can see “through the examiner’s eyes.” They can also communicate via the HoloLens microphone and speaker (Fig. 2).

This method saves time, as an expert opinion can be obtained more quickly. It can take pressure off healthcare workers, reducing the chance of errors and resulting in fewer patients being mistreated. We expect this to reduce costs as well.
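For the virtual transducer to appear at the right spot on the patient, a position chosen by the expert in the shared virtual space has to be expressed in the coordinate frame of the examiner's headset. The article does not describe how this registration is done, so the following is only a minimal sketch of the underlying idea. It assumes a known rigid transform between the two frames, restricted to a rotation about the vertical axis plus a translation; `RigidTransform`, `rotate_y`, and all numeric values are hypothetical names and placeholders, not part of the described system.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_y(p: Vec3, yaw_deg: float) -> Vec3:
    """Rotate point p about the vertical (y) axis by yaw_deg degrees."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    x, y, z = p
    return (c * x + s * z, y, -s * x + c * z)


@dataclass(frozen=True)
class RigidTransform:
    """Hypothetical frame-to-frame mapping: rotate about y, then translate."""
    yaw_deg: float
    translation: Vec3

    def apply(self, p: Vec3) -> Vec3:
        x, y, z = rotate_y(p, self.yaw_deg)
        tx, ty, tz = self.translation
        return (x + tx, y + ty, z + tz)

    def inverse(self) -> "RigidTransform":
        # If q = R p + t, then p = R^-1 q + R^-1 (-t).
        tx, ty, tz = self.translation
        return RigidTransform(
            -self.yaw_deg, rotate_y((-tx, -ty, -tz), -self.yaw_deg)
        )


# Assumed calibration between the shared space and the examiner's headset.
shared_to_headset = RigidTransform(yaw_deg=90.0, translation=(1.0, 0.0, 0.0))

# Position (in metres) where the expert placed the virtual transducer.
transducer_in_shared = (0.0, 0.0, 1.0)
transducer_in_headset = shared_to_headset.apply(transducer_in_shared)
```

The inverse transform maps in the other direction, for example to show the examiner's real transducer in the expert's VR scene. A real system would need a full 3D rotation and a calibration step to estimate the transform.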

Our main technical objectives are to create a combined AR/VR scene and interaction platform with the following features:

• examiners can use AR glasses to have a real-time AR view of the US image and the patient;

• experts can use VR glasses to “see through the eyes” of the examiner;

• experts can create a virtual transducer that can be blended into the AR view of the examiner to show where to place the transducer and at which angle;

• both can talk to each other.

System components

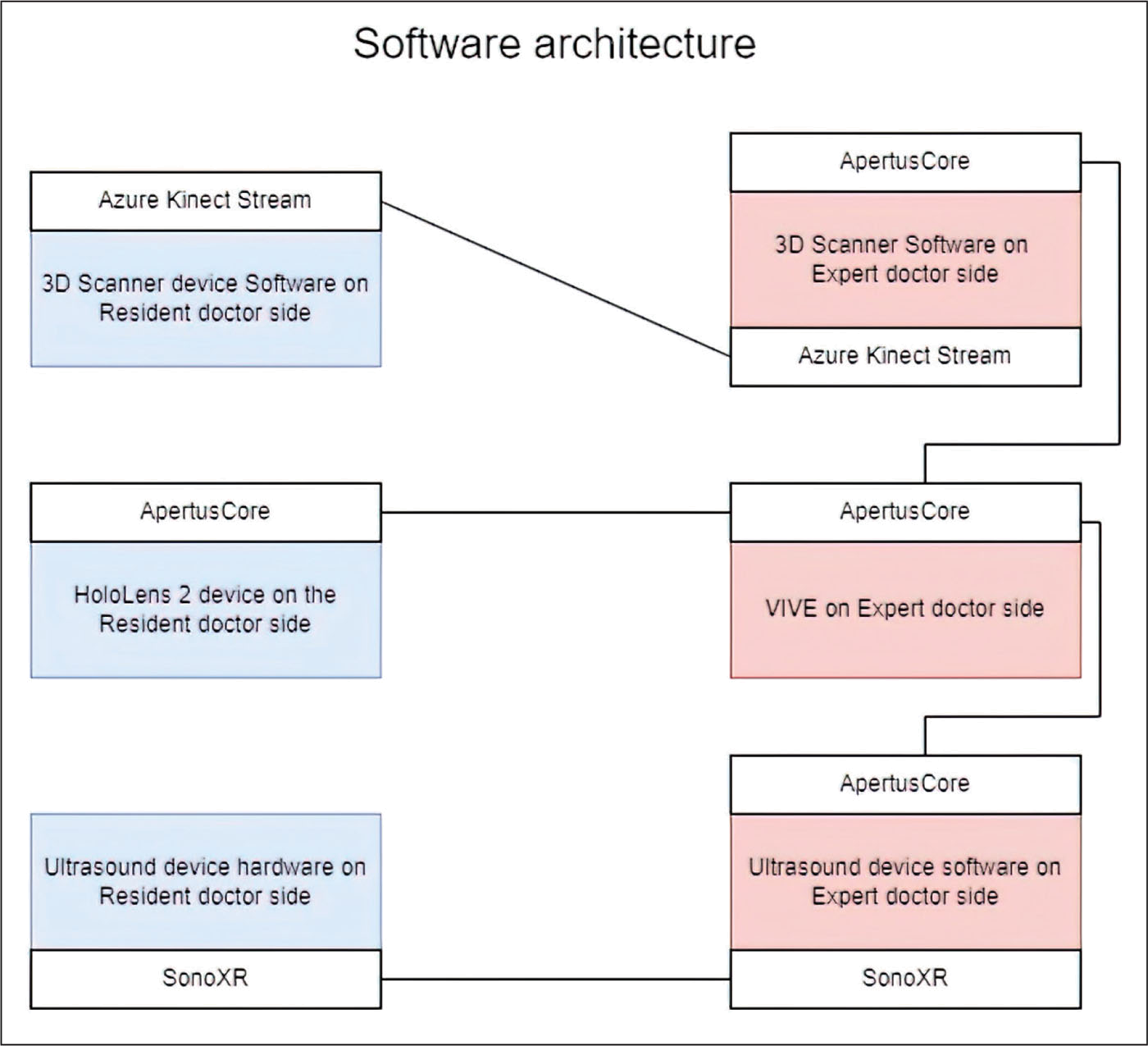

The proposed system consists of multiple components and plug-ins connected as seen in Figure 3. In the next subsections, more details about these components are provided.

HoloLens 2

Microsoft HoloLens 2 is a headset equipped with see-through holographic lenses and several different sensors and cameras, allowing a combination of real images and computer-generated images in the same field of view. Thanks to gesture, eye, and voice control capabilities and wireless technology, the device can be used hands-free and does not impede free movement. This can be particularly important in medical applications, where doctors need their hands to perform their tasks. This is why HoloLens is also used in other medical applications, including during surgery.

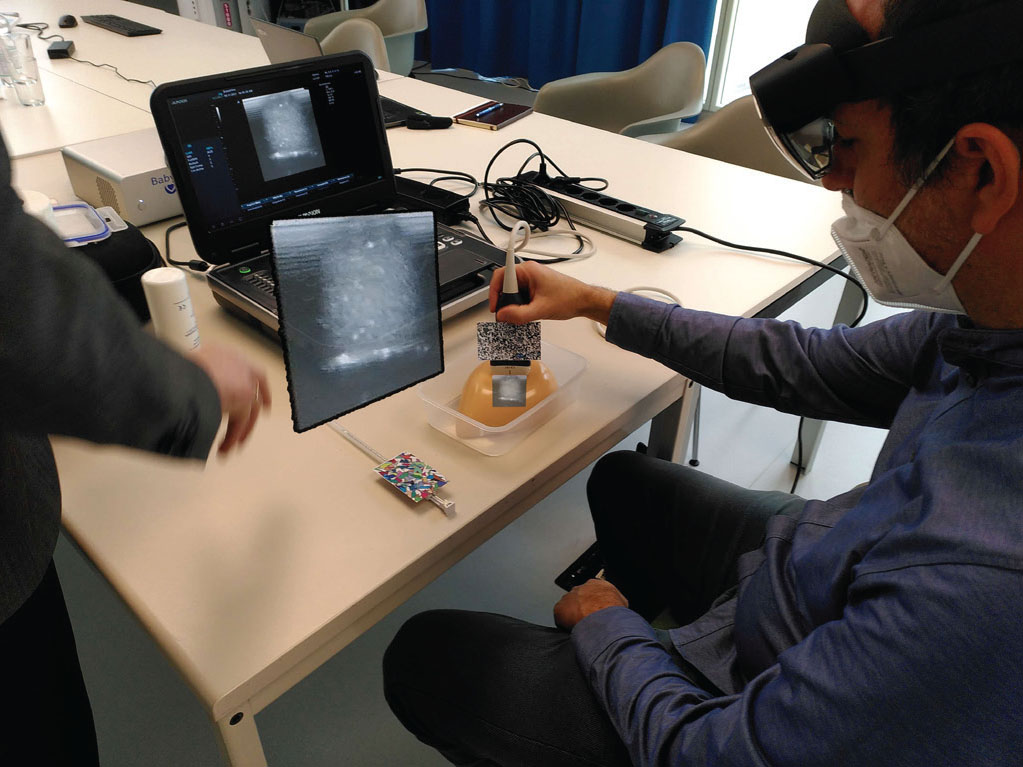

With HoloLens 2, it is possible to visualize the US video stream on the patient, just over the area of the body that is being scanned. The physician therefore does not have to look away at the US device’s screen and can focus on the US image while looking at the patient. The virtual US transducer controlled by the expert (visible in the same field of view) shows the suggested position, which helps to improve the US image.

3D scanner device: Azure Kinect

Azure Kinect is a Kinect sensor made by Microsoft. It is equipped with a 12-megapixel RGB camera and a 1-megapixel depth camera. The device can also connect to Microsoft Azure cloud services, opening up a wealth of possibilities.

In the emergency room, a 3D scanner records what happens during the US examination. The data are transmitted to the expert physician’s site, where they are processed and the 3D point cloud is created. Azure Kinect is well suited to this task because of its streaming capability.
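The step from a depth image to a 3D point cloud can be illustrated with the standard pinhole camera model. This is only a sketch and not the processing used in the described system: it ignores lens distortion, the intrinsic parameters `fx`, `fy`, `cx`, and `cy` are placeholders rather than Azure Kinect calibration values, and the function name is hypothetical. In practice the sensor's own SDK and calibration data would be used.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_point_cloud(
    depth_mm: Sequence[Sequence[int]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth image (millimetres) to 3D points (metres).

    Pixels with a depth of 0 are treated as invalid and skipped.
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    points: List[Point] = []
    for v, row in enumerate(depth_mm):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # no depth measurement for this pixel
            z = d / 1000.0
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2 x 2 depth image with two valid pixels (values are made up).
example_depth = [
    [0, 1000],
    [2000, 0],
]
cloud = depth_to_point_cloud(example_depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5)
```

Each valid pixel yields one point, so the two valid pixels above produce a cloud of two points at depths of 1 m and 2 m.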

ApertusCore

ApertusCore is a programming library, developed by SZTAKI, written in C++11, with the following features: modular, embeddable, platform-independent, and easily configurable. It contains basic software interfaces and modules for logging, event-handling, and loading plug-ins and configurations (9). ApertusCore is where the various plug-ins are connected. The streamed data from the 3D scanner is managed by ApertusCore, and the HoloLens and HTC Vive glasses are connected through it in the shared virtual space. The US data streamed through SonoXR is also fed into and connected to the virtual space.

ApertusVR is a plug-in connected to ApertusCore that is responsible for visualization. It allows us to create a shared VR world scene in which the point cloud, the US video, and the other data are visualized.

As ApertusCore is platform-independent, it is well suited to connecting multiple devices and facilitating communication between them. ApertusVR can be used to create a shared virtual space for both the HTC Vive VR headset and the HoloLens AR headset.
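The role of a core that connects plug-ins through event handling can be sketched with a small publish/subscribe hub. This is not the ApertusCore API (ApertusCore is a C++11 library whose interfaces are not shown in this article); `EventHub`, the topic names, and the payloads below are hypothetical and only illustrate how data sources and headsets might be decoupled.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class EventHub:
    """Hypothetical core: plug-ins publish to topics, others subscribe."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: Any) -> int:
        """Deliver payload to every subscriber; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


hub = EventHub()
vr_scene: List[str] = []  # stands in for the expert's VR view
ar_scene: List[str] = []  # stands in for the examiner's AR view

# Both headsets show the US image; only the VR view shows the point cloud.
hub.subscribe("us_frame", lambda frame: vr_scene.append(f"us:{frame}"))
hub.subscribe("us_frame", lambda frame: ar_scene.append(f"us:{frame}"))
hub.subscribe("point_cloud", lambda cloud: vr_scene.append(f"cloud:{cloud}"))

delivered_us = hub.publish("us_frame", "frame-0001")
delivered_cloud = hub.publish("point_cloud", "scan-0001")
```

With this kind of design, a new data source or display device can be added by registering another publisher or subscriber without changing the existing plug-ins.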

SonoXR

In most diagnostic cases and interventions, the best practice for physicians would be to have a direct view into the human body. But current imaging modalities like computed tomography, magnetic resonance imaging, or US only provide an indirect view. This indirect view is a major challenge during diagnostic procedures and a real problem during interventions.

With the assistance of SonoXR, physicians can display the live US images through AR glasses (like HoloLens 2) at the point where the image data are acquired. This gives US users a kind of “superhero X-ray vision” that helps them see anatomical structures where they actually are. In addition, a virtual US display can be freely positioned in space to improve the ergonomics of the intervention. US-AR-based interventions are reported to be faster, less error-prone, and more efficient and, as a result, to reduce the risk of infections (10,11). An example of the view through the HoloLens 2 is shown in Figure 4 (using a “phantom”).

SonoXR consists of the

• SonoXR-server, running on a mini-PC (SonoXR transmission box; Windows 10) and

• SonoXR-client that runs on a HoloLens 2 (Windows Holographic)

The SonoXR-server collects the US screen image data in real time via an HDMI connection from a US scanner at 25-50 frames per second and up to 1920 × 1080 px. After the US screen image data have been cropped to the pure US image content, the final images are sent wirelessly to the SonoXR-client on the HoloLens 2.
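To illustrate the cropping step and why it matters for wireless transmission, the sketch below cuts a rectangular region out of a frame and estimates the uncompressed data rate of the full screen capture. It is not SonoXR code: the function names are hypothetical, the crop rectangle is arbitrary, and the 3 bytes per pixel (24-bit color) is an assumption, since the article does not state the pixel format or any compression.

```python
from typing import List, Sequence


def crop(frame: Sequence[Sequence[int]], left: int, top: int,
         width: int, height: int) -> List[List[int]]:
    """Return the width x height region whose top-left pixel is (left, top)."""
    return [list(row[left:left + width]) for row in frame[top:top + height]]


def raw_rate_mbit_per_s(width: int, height: int, fps: int,
                        bytes_per_pixel: int = 3) -> float:
    """Uncompressed video data rate in megabits per second."""
    return width * height * bytes_per_pixel * 8 * fps / 1_000_000


def frame_interval_ms(fps: int) -> float:
    """Time available per frame in milliseconds."""
    return 1000.0 / fps


# 4 x 4 toy frame; each pixel value encodes its row and column.
toy_frame = [[r * 10 + c for c in range(4)] for r in range(4)]
us_region = crop(toy_frame, left=1, top=2, width=2, height=2)

# Full-screen capture at the frame rates named in the text.
rate_25 = raw_rate_mbit_per_s(1920, 1080, 25)
rate_50 = raw_rate_mbit_per_s(1920, 1080, 50)
```

Under these assumptions the full 1920 × 1080 capture would need roughly 1.2 to 2.5 Gbit/s uncompressed, with 40 ms down to 20 ms available per frame, which suggests why reducing the frame to the pure US image content before wireless transmission is useful.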

On the HoloLens 2, the US images are shown

• directly beneath the US transducer to realize the above-mentioned “superhero X-ray vision”

• on a so-called “virtual screen” that can be placed by voice command at any position in space the user chooses. Additionally, the virtual screen can be zoomed and rotated, and basic image processing operations such as changing brightness and saturation can be performed
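Showing the US image directly beneath the transducer amounts to placing a textured rectangle in space that starts at the transducer face and extends along the beam direction. The following sketch computes the four corners of such a rectangle. How SonoXR tracks the transducer and positions the image is not described in the article, so the function, its parameters, and the example values are all hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def add_scaled(p: Vec3, direction: Vec3, scale: float) -> Vec3:
    """Return p + scale * direction."""
    return (p[0] + scale * direction[0],
            p[1] + scale * direction[1],
            p[2] + scale * direction[2])


def image_quad(tip: Vec3, lateral: Vec3, beam: Vec3,
               width_m: float, depth_m: float) -> List[Vec3]:
    """Corners of the US image plane beneath the transducer.

    tip: centre of the transducer face; lateral and beam are unit vectors
    along the transducer face and into the body, respectively.
    Order: near-left, near-right, far-right, far-left.
    """
    half = width_m / 2.0
    near_left = add_scaled(tip, lateral, -half)
    near_right = add_scaled(tip, lateral, half)
    far_right = add_scaled(near_right, beam, depth_m)
    far_left = add_scaled(near_left, beam, depth_m)
    return [near_left, near_right, far_right, far_left]


# Transducer at the origin, pointing straight down (negative y);
# assumed image size: 4 cm wide, 10 cm deep.
quad = image_quad(
    tip=(0.0, 0.0, 0.0),
    lateral=(1.0, 0.0, 0.0),
    beam=(0.0, -1.0, 0.0),
    width_m=0.04,
    depth_m=0.10,
)
```

A renderer would map the live US image onto this rectangle, so that structures appear at the depth at which they were imaged.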

Discussion

The designed tool, which uses VR and mixed reality (MR) to visualize US images, offers essential features that can resolve some of the issues encountered in ER departments across Europe. First, it addresses the recurrent need for expert opinions: experts can be called in, view the images and the surroundings, and provide immediate feedback. This is made possible by the SonoXR technology, which displays the US images in the headset. Furthermore, an Azure Kinect 3D scanner provides an overview of the entire scene. Second, it can ease the scarcity of US training, as it can be used for remote learning in both directions. On the one hand, the expert can use his/her virtual US probe to help the less experienced user improve the handling of the US probe; thanks to the HoloLens, the physician sees the proposed position of the probe on the patient. On the other hand, the expert can give live training sessions to many people in remote locations by streaming his/her images to the VR headsets of the trainees.

However, despite the evident advantage of such an innovative way of training and collaboration, the essential “clinical proof” has to be provided for every planned use case, and practitioners have to get used to the new tool. We therefore plan to set up clinical trials to prove the efficacy of the system. We focus on a “plug and play” approach for the physicians. The system must

• be quick and easy to set up: Physicians in the ER do not have the time to start and use complicated systems.

• have an intuitive user interface: The user interface needs to be so intuitive that even a physician who does not use the system every day will be able to use it.

• present durable and stable hardware: Due to fast and patient-focused work, the equipment in an ER has to be robust. The AR glasses are the critical point in the setup.

• have a low latency: The expert physician needs to see the ER and hear the assistant physician in real time.

Conclusions

In summary, we propose an innovative way to deal with the shortage of US experience in ER departments by using AR and VR, an Azure Kinect 3D scanner, and the SonoXR technology. The system could provide a fast and low-cost way to improve healthcare around the world, but the different possible use cases still need clinical validation.

Disclosures

Conflict of interest: The authors declare that they have no conflict of interest.

Financial support: The work described here was partly funded by the German Federal Ministry of Education and Research in the project “ARNI” (Augmented Reality Needle Intervention; funding reference: 16SV8152).

References

- 1. Jayaprakash N, O’Sullivan R, Bey T, Ahmed SS, Lotfipour S. Crowding and delivery of healthcare in emergency departments: the European perspective. West J Emerg Med. 2009 Nov;10(4):233-239.

- 2. State of Health in the EU, Companion Report. Imprimerie Centrale in Luxembourg; 2017. (Accessed January 2022)

- 3. Benabbas R, Hanna M, Shah J, Sinert R. Diagnostic accuracy of history, physical examination, laboratory tests, and point-of-care ultrasound for pediatric acute appendicitis in the emergency department: a systematic review and meta-analysis. Acad Emerg Med. 2017 May;24(5):523-551.

- 4. Javedani PP, Metzger GS, Oulton JR, Adhikari S. Use of focused assessment with sonography in trauma examination skills in the evaluation of non-trauma patients. Cureus. 2018 Jan 16;10(1):e2076.

- 5. Leidi A, Rouyer F, Marti C, Reny JL, Grosgurin O. Point of care ultrasonography from the emergency department to the internal medicine ward: current trends and perspectives. Intern Emerg Med. 2020;15(3):395-408.

- 6. Micks T, Sue K, Rogers P. Barriers to point-of-care ultrasound use in rural emergency departments. Can J Emerg Med. 2016 Nov;18(6):475-479. Epub 2016 Jul 25.

- 7. Duanmu Y, Henwood PC, Takhar SS, et al. Correlation of OSCE performance and point-of-care ultrasound scan numbers among a cohort of emergency medicine residents. Ultrasound J. 2019;11(1):3.

- 8. Costantino TG, Satz WA, Stahmer SA, Dean AJ. Predictors of success in emergency medicine ultrasound education. Acad Emerg Med. 2003 Feb;10(2):180-183.

- 9. Apertus VR. ApertusCore. SZTAKI. (Accessed January 2022)

- 10. Nguyen T, Plishker W, Matisoff A, Sharma K, Shekhar R. HoloUS: augmented reality visualization of live ultrasound images using HoloLens for ultrasound-guided procedures. Int J Comput Assist Radiol Surg. 2022;17(2):385-391.

- 11. Rüger C, Feufel MA, Moosburner S, Özbek C, Pratschke J, Sauer IM. Ultrasound in augmented reality: a mixed-methods evaluation of head-mounted displays in image-guided interventions. Int J Comput Assist Radiol Surg. 2020;15(11):1895-1905.